Understanding Iterative Fine-Tuning on Amazon Bedrock

As organizations rapidly adopt generative AI, many run into the limits of traditional single-shot fine-tuning. That approach adjusts a model in a single training session, and if the results disappoint, teams usually have to start over. Amazon Bedrock addresses this with iterative fine-tuning, which lets you use a previously customized model as the starting point for further fine-tuning, so you can improve a model in structured, incremental steps.

Key Advantages of Iterative Fine-Tuning

A major advantage of this method is risk mitigation. Because changes are made incrementally, developers can validate each one before rolling it out more broadly, and they can base optimizations on real-world performance data. The approach also lets developers keep refining model behavior as new data arrives, adjusting the model as business needs evolve.

Step-by-Step Implementation for Developers

Before you start, you need an existing custom model to build on and an environment that meets these prerequisites:

- Ensure you have standard IAM permissions for Amazon Bedrock model customization.

- Gather incremental training data that specifically targets relevant performance gaps.

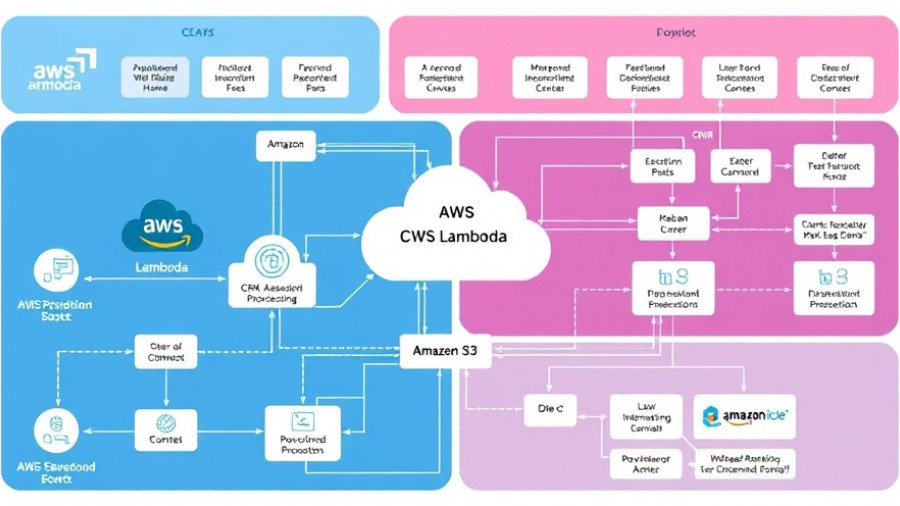

- Set up an S3 bucket for your training data and job outputs.
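As a minimal sketch of the data-preparation step, the Python snippet below validates a small set of targeted examples, writes them as JSON Lines, and uploads the file to S3. It assumes a simple `prompt`/`completion` record shape, which some Bedrock text models accept; the required format differs by base model (some models expect a conversation-style schema instead), so check the dataset documentation for your model. The helper names are hypothetical; only `boto3.client("s3").upload_file` is a real library call.

```python
import json
from pathlib import Path
from typing import Dict, Iterable, List

# Assumed record shape; check the dataset format your base model requires.
REQUIRED_KEYS = ("prompt", "completion")


def to_jsonl_lines(examples: Iterable[Dict[str, object]]) -> List[str]:
    """Validate examples and serialize each as one JSON object per line."""
    lines = []
    for i, ex in enumerate(examples):
        missing = [
            k for k in REQUIRED_KEYS
            if not isinstance(ex.get(k), str) or not ex[k].strip()
        ]
        if missing:
            raise ValueError(f"example {i} has empty or missing fields: {missing}")
        # Keep only the expected keys so stray metadata is not uploaded.
        lines.append(json.dumps({k: ex[k] for k in REQUIRED_KEYS}, ensure_ascii=False))
    if not lines:
        raise ValueError("no training examples supplied")
    return lines


def write_jsonl(examples: Iterable[Dict[str, object]], path: str) -> int:
    """Write validated examples to a local JSONL file and return the record count."""
    lines = to_jsonl_lines(examples)
    Path(path).write_text("\n".join(lines) + "\n", encoding="utf-8")
    return len(lines)


def upload_to_s3(path: str, bucket: str, key: str) -> str:
    """Upload the file and return the s3:// URI to use in the job's training data config."""
    import boto3  # imported lazily so the helpers above work without boto3

    boto3.client("s3").upload_file(path, bucket, key)
    return f"s3://{bucket}/{key}"
```

Validating locally before uploading keeps a malformed record from surfacing only after a training job has been submitted.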

You can start an iterative fine-tuning job from either the Amazon Bedrock console or the AWS SDK. In the console, you select your previously customized model as the base model when you create the job; you can then monitor the job's status and track training metrics in the console's charts. With the SDK, you do the same by passing the custom model's ARN as the base model identifier.
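The SDK path can be sketched with boto3's `bedrock` client. The snippet assumes you already have the ARN of the custom model from the previous iteration, an IAM role that Bedrock can assume, and S3 URIs for the new training data and outputs. The helpers `build_iterative_job_request`, `submit_iterative_job`, and `wait_for_job` are hypothetical; `create_model_customization_job` and `get_model_customization_job` are the boto3 operations they wrap.

```python
import time
from typing import Any, Callable, Dict, Optional

# Statuses after which a Bedrock customization job no longer changes.
TERMINAL_STATES = {"Completed", "Failed", "Stopped"}


def build_iterative_job_request(
    job_name: str,
    custom_model_name: str,
    role_arn: str,
    previous_custom_model_arn: str,
    training_s3_uri: str,
    output_s3_uri: str,
    hyper_parameters: Optional[Dict[str, Any]] = None,
) -> Dict[str, Any]:
    """Build keyword arguments for create_model_customization_job.

    For iterative fine-tuning, baseModelIdentifier is the ARN of the
    previously customized model rather than a foundation model ID.
    """
    request: Dict[str, Any] = {
        "jobName": job_name,
        "customModelName": custom_model_name,
        "roleArn": role_arn,
        "baseModelIdentifier": previous_custom_model_arn,
        "customizationType": "FINE_TUNING",
        "trainingDataConfig": {"s3Uri": training_s3_uri},
        "outputDataConfig": {"s3Uri": output_s3_uri},
    }
    if hyper_parameters:
        # The API takes hyperparameters as a string-to-string map;
        # which keys are valid depends on the base model (assumption to verify).
        request["hyperParameters"] = {k: str(v) for k, v in hyper_parameters.items()}
    return request


def submit_iterative_job(request: Dict[str, Any], region_name: Optional[str] = None) -> str:
    """Start the job with boto3 and return its ARN."""
    import boto3  # imported lazily so the other helpers run without boto3

    bedrock = boto3.client("bedrock", region_name=region_name)
    return bedrock.create_model_customization_job(**request)["jobArn"]


def wait_for_job(
    client: Any,
    job_arn: str,
    poll_seconds: float = 60.0,
    sleep: Callable[[float], None] = time.sleep,
) -> Dict[str, Any]:
    """Poll the job until it reaches a terminal status, then return its description."""
    while True:
        job = client.get_model_customization_job(jobIdentifier=job_arn)
        if job["status"] in TERMINAL_STATES:
            return job
        sleep(poll_seconds)
```

In use, you would pass the request from `build_iterative_job_request` to `submit_iterative_job`, then call `wait_for_job(boto3.client("bedrock"), job_arn)`. When a job completes, the `outputModelArn` in its description is the model to evaluate and, if it improves on the previous one, the base model for the next iteration. Hyperparameter keys such as `epochCount` vary by base model, so treat any you pass as an assumption to check against the documentation.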

Best Practices for Maximum Impact

Successful iterative fine-tuning depends on a few practices. Prioritize data quality over quantity: each new training set should address the gaps identified in the previous iteration. Keep evaluation consistent: establish baseline performance metrics before the first iteration and evaluate every later model the same way, so you can measure improvements meaningfully across iterations. This structure avoids wasted training runs and makes it clear when to pause or stop fine-tuning.
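The evaluation discipline described above can be expressed as a small stopping rule. This sketch is an illustration, not a Bedrock feature: each iteration is scored on the same fixed evaluation set as the baseline, and fine-tuning continues only while new iterations beat the best accepted score by a minimum margin. `IterationResult`, `should_continue`, `best_iteration`, and the `min_gain` and `patience` thresholds are all hypothetical choices you would tune for your own metric.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class IterationResult:
    name: str
    score: float  # higher is better; measured on the same fixed evaluation set


def should_continue(history: List[IterationResult], min_gain: float = 0.01, patience: int = 2) -> bool:
    """Return True if another fine-tuning iteration still looks worthwhile.

    history[0] is the baseline. An iteration counts as progress only if it beats
    the best accepted score by at least min_gain; after `patience` consecutive
    iterations without progress, stop.
    """
    if len(history) < 2:
        return True
    best = history[0].score
    stalled = 0
    for result in history[1:]:
        if result.score >= best + min_gain:
            best = result.score
            stalled = 0
        else:
            stalled += 1
    return stalled < patience


def best_iteration(history: List[IterationResult]) -> IterationResult:
    """Pick the highest-scoring model (possibly the baseline) as the one to deploy."""
    return max(history, key=lambda r: r.score)
```

Measuring progress against the best accepted score, rather than only the previous iteration, means a regression does not lower the bar for the next one.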

Conclusion: The Path Forward for AI Development

In summary, iterative fine-tuning on Amazon Bedrock enables continuous improvement of custom models. Organizations can build on their existing customization work instead of starting from scratch, which makes the method valuable for developers and engineers. With it, you can keep pace with rapidly evolving AI technologies and keep your models aligned with real-world requirements.
